SaaS founders face a clear challenge in 2026: understanding AI terminology is no longer just about staying current. It is about building products that can compete and scale in an AI-first marketplace. The vocabulary has grown beyond basic machine learning concepts to include specialized infrastructure, governance, and implementation frameworks that directly affect product success.

This guide is designed for SaaS founders, CTOs, and product leaders who need to make informed decisions about AI integration without getting lost in technical jargon. You'll learn the exact terms and concepts that separate successful AI implementations from expensive experiments.

We'll cover the essential AI terms for 2026 across three areas that determine your product's scalability. First, you'll master technical implementation terminology, from AI workflow orchestration to the infrastructure requirements that keep your AI features running reliably in production. Next, we'll decode business-focused concepts like vertical AI applications and data-as-a-service monetization models that can open new revenue streams. Finally, you'll understand the AI governance and compliance terminology that is becoming essential for enterprise sales cycles and customer trust.

The companies winning in 2026 aren't just adding AI features; they're building AI-ready platforms from the ground up. The language you use shapes how you think about these challenges, and the right terminology helps you ask better questions, make smarter architectural decisions, and communicate more effectively with your technical team.

Essential AI Terminology for Technical Implementation

Machine Learning Models and Training Concepts

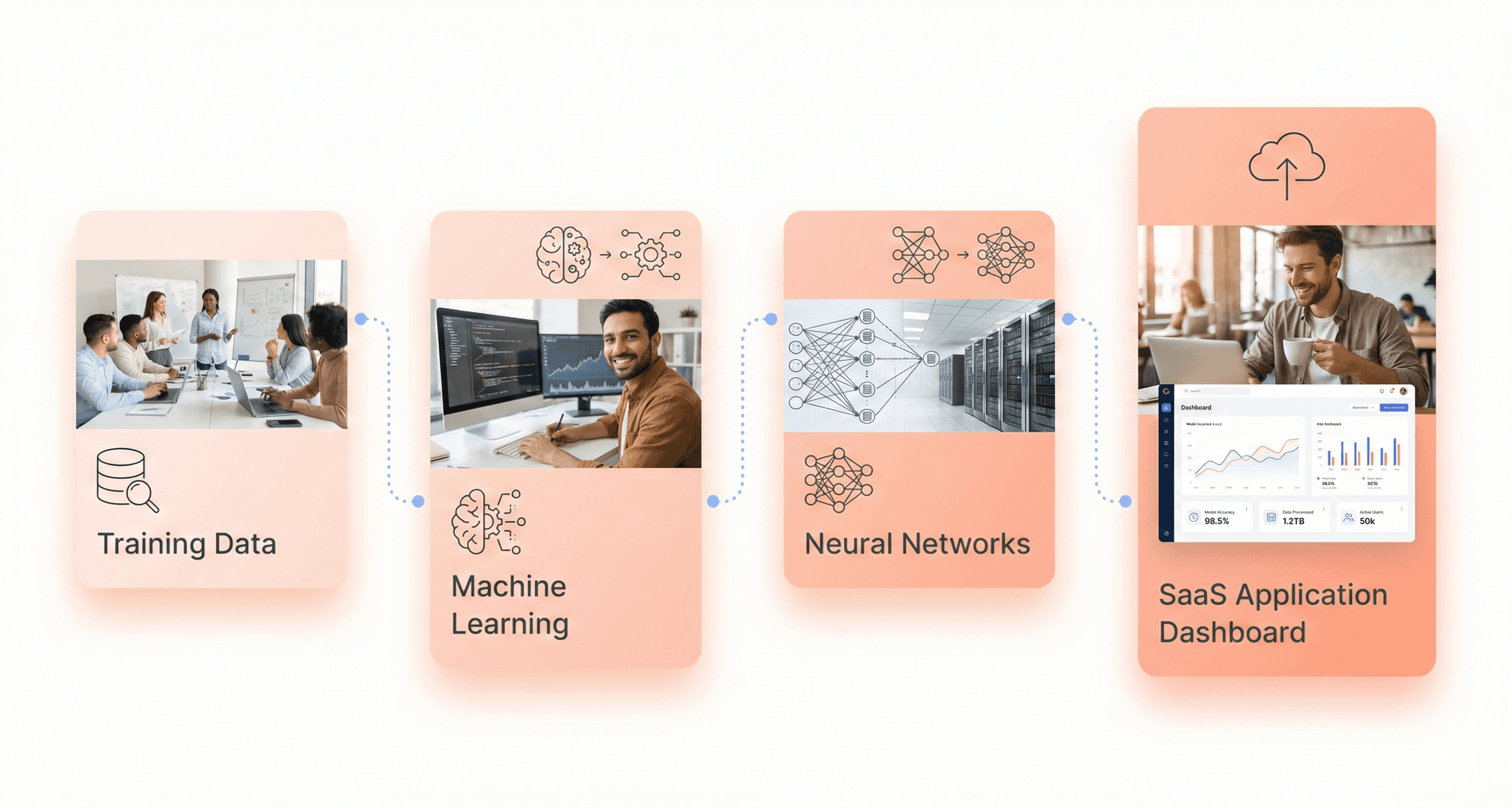

AI terminology for SaaS founders begins with the core machine learning concepts that power modern applications. Machine Learning (ML) is a subset of AI in which computers learn patterns from data instead of following rules explicitly programmed for each task. Because these systems improve as they process more information, ML is the foundation for scalable AI product development.

Deep Learning extends this concept through neural networks with multiple layers that analyze complex data patterns. These sophisticated systems power image recognition, voice assistants, and language models that can generate human-like text. For SaaS founders, deep learning capabilities enable advanced features like automated customer support, content generation, and predictive analytics.

Neural Networks function as computing systems inspired by biological neural networks, consisting of interconnected nodes that process information and learn patterns. Their power lies in recognizing complex patterns that traditional programming approaches cannot handle effectively, making them invaluable for SaaS applications requiring sophisticated data analysis.

Training Data represents the information used to teach AI systems specific tasks, with quality and quantity significantly impacting performance. For example, training an AI system to recognize customer sentiment requires thousands of labeled examples. Models serve as mathematical representations of real-world processes that make predictions about new, unseen information after training on data.

Algorithms provide the set of rules or instructions computers follow to solve problems, functioning like recipes that guide systems toward desired outcomes. Understanding these concepts enables SaaS founders to make informed decisions about AI implementation strategies.

Data Pipeline and Infrastructure Terms

Big Data encompasses large and complex datasets requiring specialized technologies for effective management and analysis. Characterized by high volume, velocity, and variety, Big Data helps companies discover hidden patterns and trends for smarter decision-making in SaaS environments.

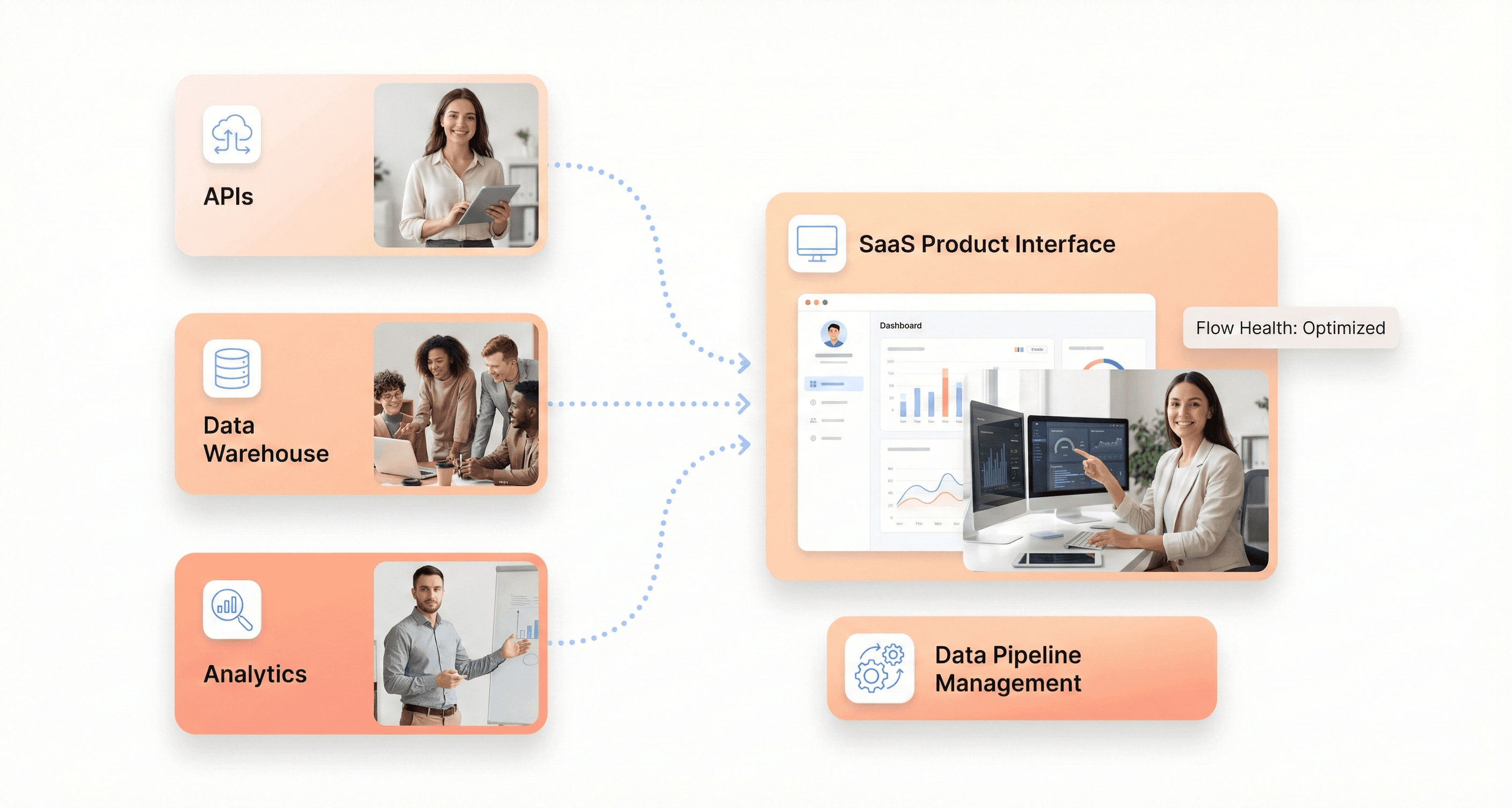

Data Warehouses serve as centralized systems designed to store, organize, and manage large volumes of data. Unlike regular databases used for daily operations, data warehouses optimize for reading and querying historical data rather than frequent updates. Organizations leverage data warehouses to support business intelligence, generate reports, and identify trends for data-driven decisions.

Data Mapping involves connecting data fields from one source to another, enabling efficient data transition and usage. This crucial step in data management tasks like migration, integration, and warehousing ensures consistency and accuracy across systems, particularly important for SaaS platforms handling multiple data sources.
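As a rough illustration of data mapping, the sketch below renames fields from a source record to a destination schema and reports anything left unmapped. The field names and the `FIELD_MAP` table are hypothetical, chosen only for the example.

```python
# Hypothetical mapping from a source CRM export to a destination schema.
FIELD_MAP = {
    "cust_name": "customer_name",
    "email_addr": "email",
    "signup_dt": "signup_date",
}


def map_record(source: dict, field_map: dict) -> dict:
    """Rename source fields to destination fields and list unmapped ones."""
    mapped = {dest: source[src] for src, dest in field_map.items() if src in source}
    # Fields present in the source but missing from the map need a decision.
    unmapped = sorted(set(source) - set(field_map))
    return {"record": mapped, "unmapped_fields": unmapped}


result = map_record(
    {"cust_name": "Acme Ltd", "email_addr": "ops@acme.example", "plan": "pro"},
    FIELD_MAP,
)
print(result)
```

Surfacing unmapped fields explicitly, rather than silently dropping them, is one simple way to keep migrations and integrations auditable.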

APIs (Application Programming Interfaces) establish protocols enabling different software systems to exchange data. SaaS developers use APIs to facilitate communication between various components within applications or with third-party services like payment gateways and email platforms, allowing data sharing without rewriting underlying logic.

JSON (JavaScript Object Notation) provides a lightweight data interchange format that's human-readable and has become the standard for web APIs. Its structure uses key-value pairs and arrays to organize data in readable formats, making it essential for modern SaaS data pipelines.
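For readers who have not worked with JSON directly, here is a minimal sketch of a round trip using Python's standard `json` module. The payload fields are invented for illustration.

```python
import json

# Illustrative payload: key-value pairs plus an array, as described above.
payload = {
    "account_id": 42,
    "plan": "pro",
    "features": ["ai_summaries", "sso"],
    "trial": False,
}

encoded = json.dumps(payload, sort_keys=True)  # Python dict -> JSON text
decoded = json.loads(encoded)                  # JSON text -> Python dict

print(encoded)
```

Note that Python's `False` becomes JSON's lowercase `false` in the encoded text, and decoding restores the original structure.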

AI Orchestration and Workflow Management

Workflow Automation uses technology to run repetitive business tasks without manual intervention. Teams define rules, triggers, and actions, and increasingly add AI steps, so that software carries out tasks in a defined sequence. For SaaS founders, workflow automation is a critical capability for scaling operations efficiently.

Containerization packages software together with its dependencies so it runs reliably across different computing environments. Docker is a popular tool for building and running containers, and Kubernetes orchestrates them across clusters, so applications behave consistently in development, testing, and production. This approach is essential for AI systems that must be deployed consistently across varied infrastructure configurations.

Microservices Architecture organizes applications as collections of small, independent services, each responsible for specific business functions. This approach allows teams to develop, deploy, and scale services independently, improving flexibility and fault isolation while enabling faster delivery of AI-powered features.

Load Balancing distributes incoming network traffic evenly across multiple servers to ensure optimal performance and reliability. For AI applications handling varying workloads, load balancing maintains system stability by preventing any single server from becoming overwhelmed, crucial for maintaining consistent user experiences.
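One of the simplest load balancing strategies is round robin, where requests are handed to servers in a fixed rotation. The sketch below assumes three hypothetical inference servers; production load balancers typically also account for health checks and current load.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out servers in a fixed rotation (no health or load awareness)."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = cycle(servers)

    def next_server(self):
        return next(self._servers)


# Server names are placeholders for illustration.
balancer = RoundRobinBalancer(["inference-a", "inference-b", "inference-c"])
assignments = [balancer.next_server() for _ in range(5)]
print(assignments)
```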

Edge Computing processes data closer to sources like IoT devices or sensors, reducing latency and improving response times. This distributed computing approach proves particularly valuable for AI applications requiring real-time processing capabilities.

MLOps and Model Deployment Vocabulary

Continuous Integration/Continuous Deployment (CI/CD) refers to DevOps practices that automate building, testing, and deploying software. This approach lets development teams deliver AI model updates faster while reducing the risk of defects, which is essential for maintaining competitive AI-powered SaaS products.

Auto-Scaling automatically adjusts resources based on application workload and demand. Cloud providers like AWS, Azure, and Google Cloud offer autoscaling tools that change the number of active servers as AI processing requirements rise and fall, which helps organizations control costs and removes the need for manual intervention.
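To make the scaling decision concrete, the sketch below applies a proportional rule of the kind documented for the Kubernetes Horizontal Pod Autoscaler: scale the replica count by the ratio of the observed metric to its target, then clamp to configured bounds. The default bounds and the example numbers are assumptions, and real autoscalers add tolerances and cooldown periods not shown here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling: replicas grow with the metric-to-target ratio."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (assumed defaults for this sketch).
    return max(min_replicas, min(max_replicas, raw))


scale_up = desired_replicas(4, current_metric=90, target_metric=60)
scale_down = desired_replicas(4, current_metric=20, target_metric=60)
capped = desired_replicas(8, current_metric=200, target_metric=50)
print(scale_up, scale_down, capped)
```

Here the metric could be average CPU or GPU utilization per replica; the cap keeps a traffic spike from producing an unbounded bill.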

Fault Tolerance ensures systems remain available despite unexpected errors. This approach maintains system stability and user trust by minimizing disruptions, particularly critical for AI applications where model failures could impact user experiences or business operations.

Version Control systems track code changes over time, allowing multiple developers to work simultaneously on AI projects. Git, the most popular version control system, enables teams to see change histories, revert to previous model versions, and merge contributions safely.

Containerizing AI models ensures consistent deployment across different environments, while Kubernetes orchestrates those containers automatically, handling scaling, load balancing, and recovery. Together, these technologies simplify managing complex AI systems by automating the deployment and operations tasks that scalable AI products depend on.

Understanding AI Adoption Patterns in SaaS

Technical vs Non-Technical Founder Perspectives

The divergent approaches between technical and non-technical founders create distinct AI adoption patterns within SaaS organizations. Technical founders, typically with engineering or development backgrounds, prioritize implementation-focused concerns when evaluating AI initiatives. Their primary considerations center around how AI impacts existing architecture, compute and maintenance costs, and data accessibility and control mechanisms.

In contrast, non-technical founders approach AI adoption from a business outcomes perspective. They emphasize where AI creates tangible user value and how it differentiates the product in competitive markets, and they worry about reliability and about building the wrong feature. This fundamental difference in perspective shapes how teams prioritize AI in their roadmaps and influences the entire product development strategy.

Rather than creating organizational friction, these complementary viewpoints actually strengthen AI adoption patterns when properly leveraged. Technical founders ensure implementation feasibility while non-technical founders maintain focus on business value and user impact.

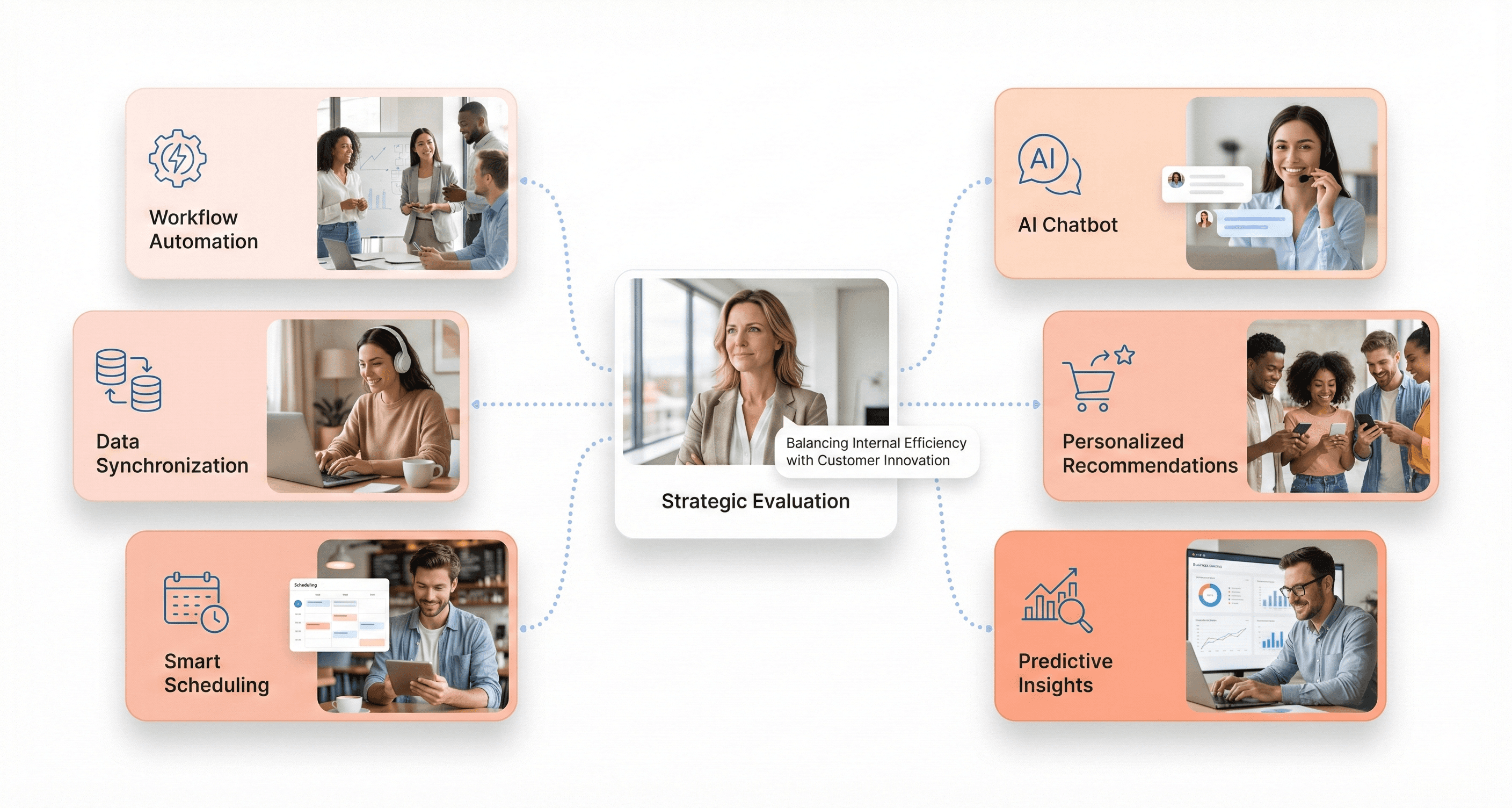

Internal Automation vs Customer-Facing Features

AI adoption patterns reveal a clear preference for internal automation over flashy customer-facing features. Founders consistently emphasize practical, efficiency-focused applications that automate manual tasks, accelerate customer value, and support more informed product decisions.

Internal automation priorities include:

Automating billing, reconciliation, and manual workflows

Supporting QA automation processes

Reducing repetitive administrative tasks

Streamlining internal decision-making

Customer-facing AI applications focus on:

AI-assisted workflows for productivity enhancement

Smarter onboarding and personalized guidance

Predictive analytics and customer insights

Context-aware recommendations and intelligent user support

This pattern reflects a growing realization that AI doesn't need to be customer-facing to generate significant value. Many impactful AI implementations happen quietly in the background, improving workflows and reducing operational drag without fundamentally changing how the product feels to users.

Validation Methods for AI Features

SaaS founders approach AI experimentation with structured methodology, prioritizing speed of learning over perfect products. Effective validation focuses on three things: vision alignment (ensuring AI features fit the product's core value proposition), UX clarity (making AI interactions feel obvious rather than magical), and ethical considerations that maintain user trust even during early testing phases.

Common validation approaches include:

| Method | Purpose | Key Benefits |
|---|---|---|
| Internal dogfooding | Catch issues early | Rapid iteration, cost-effective testing |
| Limited betas | Observe real user behavior | Authentic feedback, controlled exposure |
| Public iteration | Shorten feedback cycles | Faster learning, market validation |

Teams validate AI-powered ideas by measuring direct user feedback and quantifiable outcomes rather than obsessing over perfect releases. This methodology ensures AI features align with actual user needs rather than theoretical innovation.

Risk Assessment and Timing Considerations

Founders express specific concerns about AI adoption timing that influence their implementation strategies. The primary worries are: building the wrong thing too early and wasting resources on unvalidated AI features; maintenance and scalability challenges, including ongoing costs and model updates; overcomplicating the product by introducing friction or confusing user experiences; and security and data privacy considerations around sensitive data and compliance requirements.

Key timing signals that indicate readiness for AI implementation:

Clear user demand or repeated requests for specific AI-driven capabilities

Direct ties to measurable outcomes such as time saved, costs reduced, or engagement improved

Clean, reliable data with clear integration paths

AI supporting existing workflows rather than introducing entirely new ones

These risk assessment patterns suggest founders aren't resistant to AI adoption but exercise deliberate caution. They prioritize intentional AI implementation over automatic adoption, waiting for clearer signals around ROI, technical readiness, and manageable integration complexity before fully committing resources to AI initiatives.

AI Infrastructure Requirements for Scalable Products

Data Quality and Accessibility Prerequisites

When building scalable AI infrastructure for SaaS products, data quality and accessibility form the foundation of everything else. AI workloads depend on two critical storage realities: capacity for massive datasets (including training data, checkpoints, and model artifacts) and throughput for active training to prevent GPUs from idling during processing.

Data Pipeline Health Monitoring becomes essential for catching quality problems before they impact downstream systems. Key metrics include error rates, ingestion throughput, and records processed per second. Equally important is tracking data quality indicators like schema drift detection, missing value ratios, and duplicate rates that can compromise model accuracy.

Data Freshness and Volume Management requires monitoring time since last update, source latency, and staleness by dataset. Queue depth, storage growth rates, and backfill lag provide insights into system capacity and performance bottlenecks.
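A few of the indicators above can be computed with very little code. The following sketch derives a missing value ratio, a duplicate rate, and staleness in hours from a small batch of rows. The function name, field names, and sample data are hypothetical, and a production pipeline would compute these per dataset on a schedule.

```python
from datetime import datetime, timezone


def quality_report(rows, required_fields, key_field, last_updated, now):
    """Compute simple data quality and freshness indicators for a batch."""
    total = len(rows)
    cells = total * len(required_fields)
    # A cell counts as missing when it is absent, None, or an empty string.
    missing = sum(
        1 for row in rows for field in required_fields
        if row.get(field) in (None, "")
    )
    # Rows sharing a key with an earlier row count as duplicates.
    duplicates = total - len({row.get(key_field) for row in rows})
    return {
        "missing_value_ratio": missing / cells if cells else 0.0,
        "duplicate_rate": duplicates / total if total else 0.0,
        "staleness_hours": (now - last_updated).total_seconds() / 3600,
    }


rows = [
    {"id": 1, "email": "a@example.com", "plan": "pro"},
    {"id": 2, "email": None, "plan": "free"},
    {"id": 2, "email": "b@example.com", "plan": ""},
    {"id": 3, "email": "c@example.com", "plan": "pro"},
]
report = quality_report(
    rows,
    required_fields=["email", "plan"],
    key_field="id",
    last_updated=datetime(2026, 1, 1, 0, 0, tzinfo=timezone.utc),
    now=datetime(2026, 1, 1, 6, 0, tzinfo=timezone.utc),
)
print(report)
```

Passing `now` in explicitly keeps the calculation deterministic and easy to test; thresholds for alerting on each value are a team decision and are not shown.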

For distributed AI environments, data must be accessible across nodes simultaneously. This necessitates a tiered storage strategy that balances speed, scale, and cost:

Bulk storage (Object storage like S3) for raw datasets, model checkpoints, and archives

High-performance storage (NVMe SSDs) for active training workloads requiring constant data access

Caching layers to bridge between storage tiers and reduce latency

System Architecture Modernization Needs

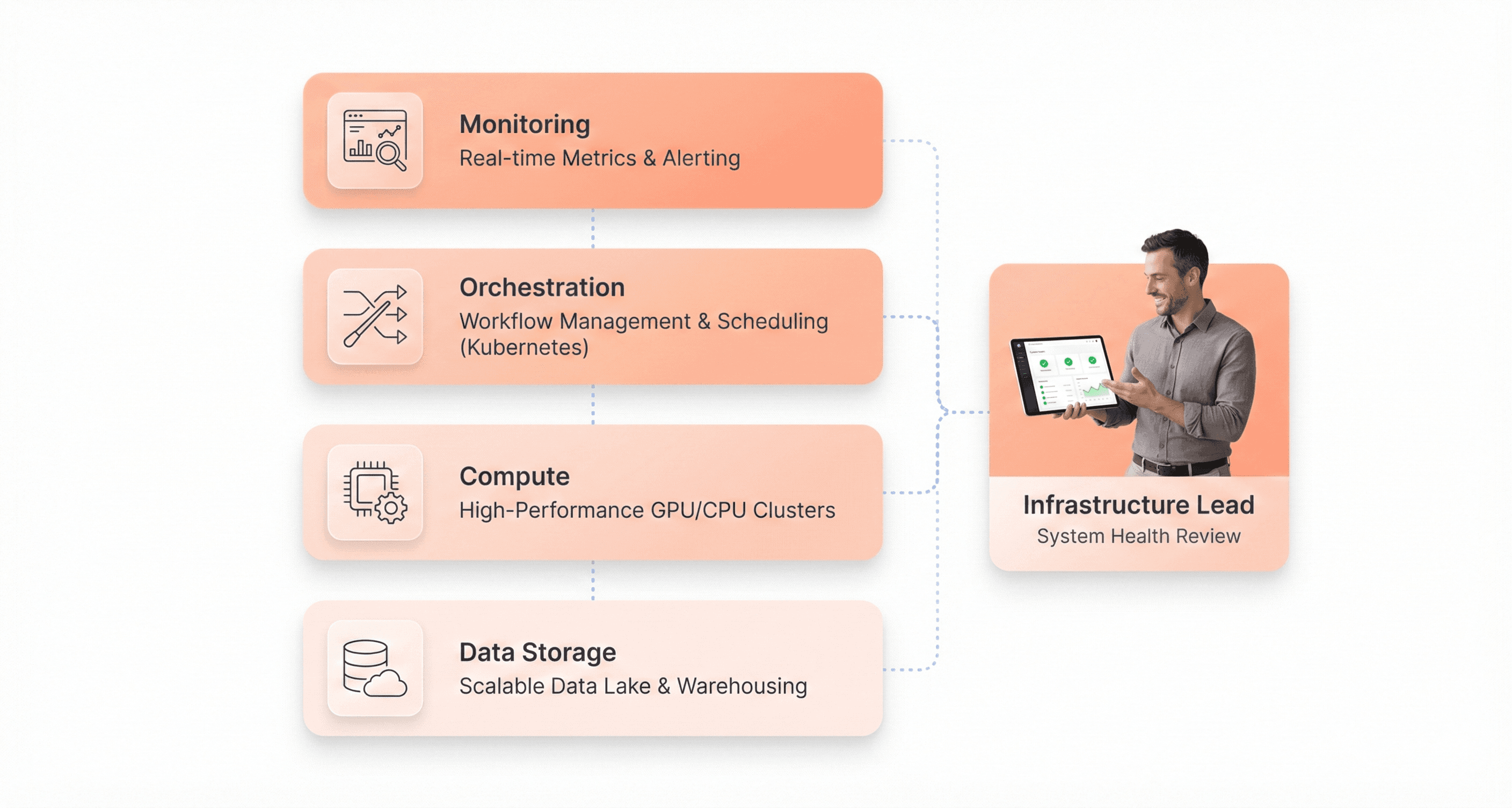

AI workloads break traditional infrastructure patterns, requiring systems that operate as coordinated units rather than collections of independent parts. The four pillars of AI infrastructure (compute, storage, networking, and orchestration) must work interdependently to handle probabilistic, resource-intensive, and distributed processing requirements.

Compute Architecture Alignment demands matching hardware to specific workload types:

Massive transformer training requires top-tier GPUs with multi-node scaling capabilities

Production inference can utilize midrange GPUs or optimized CPUs

Classical machine learning (random forests, gradient boosting) often performs adequately on CPUs at lower cost

Network Infrastructure Scaling becomes critical for distributed training, where GPUs must constantly synchronize model gradients. Multi-node training clusters in the cloud generally benefit from high-bandwidth interconnects (100 Gbps Ethernet or faster), while edge AI deployments need low-latency strategies with local processing so they keep working through intermittent connectivity.

Orchestration and Container Management through platforms like Kubernetes has become the de facto standard. Tools that run on top of it, such as Kubeflow, Ray, and Dask, support distributed training, model serving, and workload scheduling with automated scaling and fault tolerance. These systems turn infrastructure from static hardware into an adaptive platform that responds dynamically to changing computational demands.

Observability and Monitoring Capabilities

Traditional monitoring tools designed for static infrastructure cannot adequately track AI workloads that are probabilistic, distributed, and dynamic. Systems can appear healthy even when model accuracy drops or GPU utilization plummets, making specialized observability crucial for AI infrastructure success.

Multi-Layer Metrics Tracking requires monitoring across five key categories:

| Category | Key Metrics | Purpose |
|---|---|---|
| Data Processing | Pipeline health, data quality, freshness, volume | Prevent training failures |
| Model Training | GPU performance, training efficiency, I/O performance | Optimize compute costs |
| Inference | Latency, throughput, reliability, efficiency | Ensure user experience |
| LLM/RAG Systems | Retrieval quality, generation quality, context efficiency | Maintain accuracy |
| Platform-Wide | Resource saturation, orchestration health, network performance | Early warning detection |

Unified Visibility Across Hybrid Environments becomes essential when AI workloads span multiple clouds, on-premises systems, and edge locations. Effective observability correlates GPU utilization with storage latency spikes, traces model drift back to schema changes, and provides causality understanding rather than simple alert reactions.

Performance Correlation Capabilities enable teams to identify GPU or storage bottlenecks faster, track training efficiency and inference latency in real-time, control costs by monitoring underutilized resources, and maintain reliability across distributed deployments.
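Inference latency is usually tracked with percentiles rather than averages, because a few slow requests can hide behind a healthy-looking mean or, as here, distort it. The sketch below uses a nearest-rank percentile on made-up latency samples; monitoring systems often use other interpolation methods, so treat the exact values as illustrative.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value covering pct percent of samples."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical inference latencies in milliseconds, with one slow outlier.
latencies_ms = [120, 95, 110, 400, 105, 98, 130, 102, 115, 2500]

p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
mean = sum(latencies_ms) / len(latencies_ms)
print(p50, p95, mean)
```

In this sample the median request is fast while the tail is very slow, a gap that a single average would not make visible.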

Security and Compliance Framework Terms

AI platforms face unique security vulnerabilities including data poisoning, model theft, inference attacks, and polymorphic malware development. These threats require specialized security frameworks that address both traditional infrastructure protection and AI-specific attack vectors.

AI-Specific Security Threats encompass several critical areas:

Data Poisoning: Malicious manipulation of training datasets to compromise model behavior

Model Theft: Unauthorized extraction of proprietary algorithms and trained models

Inference Attacks: Attempts to extract sensitive information through model queries

Polymorphic Malware: AI-generated malicious code that adapts to evade detection

Compliance and Privacy Considerations become particularly complex in AI systems due to their impact on data protection and privacy rights. Issues include informed consent challenges, surveillance concerns, and the handling of personal data in training datasets. AI infrastructure must ensure secure data handling and compliance with industry standards to minimize legal and reputational risks.

International Regulatory Alignment requires understanding policies from organizations like the United Nations, OECD, Council of Europe, and European Parliament, which acknowledge the significance of human rights in AI development and deployment. These frameworks establish guidelines for responsible AI infrastructure that protects individual privacy while enabling innovation.

Automated Security Integration through MLOps practices ensures version control for models, automated training and deployment pipelines, and continuous security monitoring throughout the AI lifecycle. This integration with DevOps security practices and CI/CD processes enables automated build, test, and deployment processes while maintaining security standards across the entire AI infrastructure stack.

Workflow Orchestration and AI Control Systems

Orchestration Platforms and Integration Tools

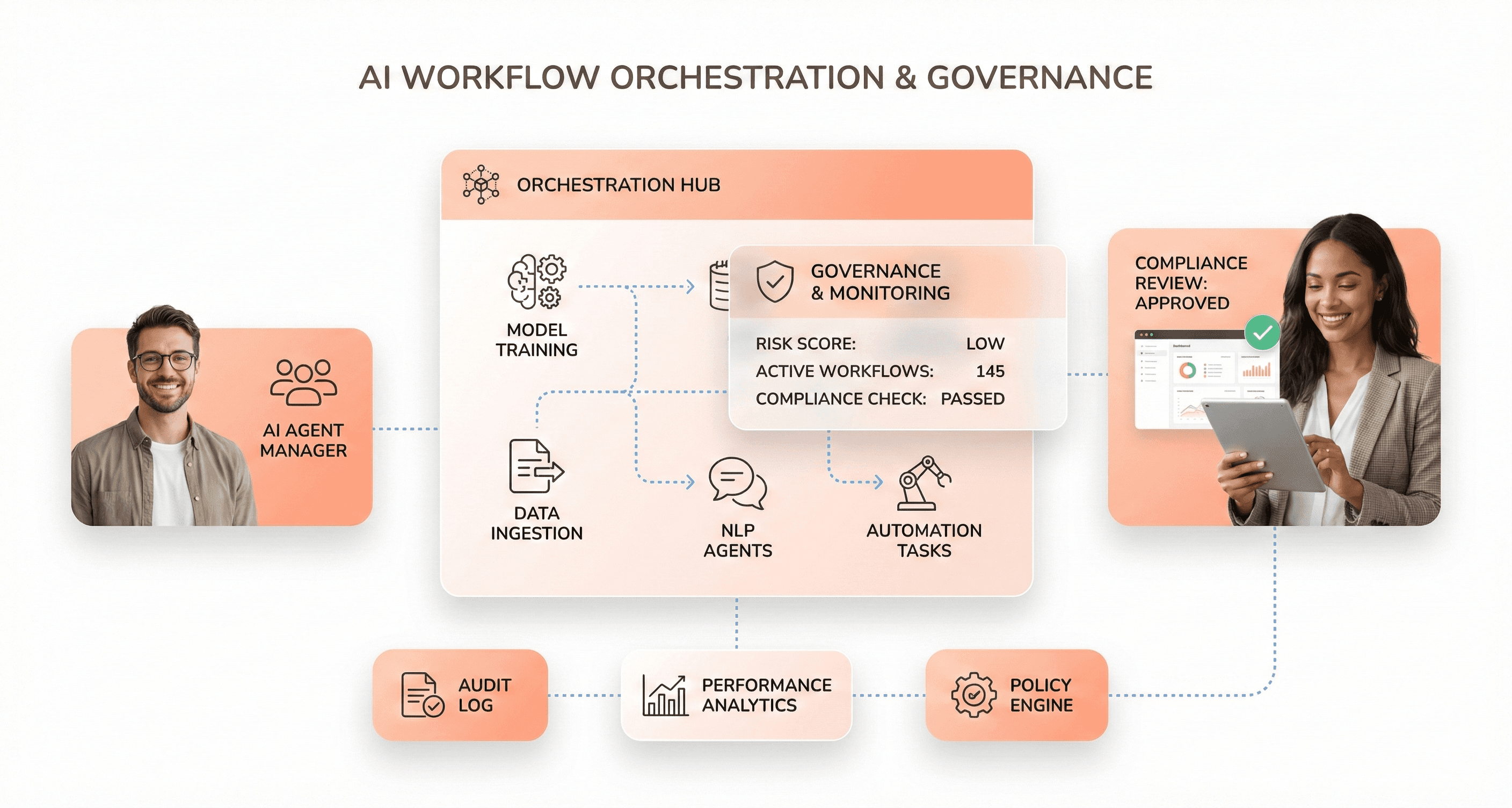

AI orchestration platforms serve as the central nervous system for coordinating artificial intelligence models, systems, and integrations across scalable SaaS products. These platforms automate AI workflows, track progress toward task completion, manage resource usage, monitor data flow and memory, and handle failure events with minimal human intervention.

Modern orchestration tools fall into two primary categories: software engineering-focused platforms and data science-oriented solutions. For SaaS founders building scalable AI products, understanding these distinctions is crucial for selecting the right infrastructure.

Developer-Centric Orchestration Tools like LangChain, CrewAI, and Microsoft AutoGen enable software engineers to integrate AI capabilities into applications through agent-based architectures and API orchestration. These platforms emphasize code integration, flexible deployment patterns, and minimal ML expertise requirements. LangChain, for instance, provides comprehensive toolkits for chaining LLM-driven tasks, data sources, and APIs into cohesive workflows, while CrewAI orchestrates collaborative multi-agent teams for complex task delegation.

Data Science Platforms such as Apache Airflow, Kubeflow, and Prefect focus on managing the entire ML lifecycle through robust data pipelines, model training workflows, and experiment tracking. These tools provide capabilities for dataset management, computational requirements handling, and reproducibility assurance from experimentation to production.

The three fundamental pillars facilitating AI workflow orchestration are AI integration, automation, and management. Integration connects AI tools, databases, and system components through automated data pipelines and real-time communication between ML models via APIs. This creates AI ecosystems that chain models together in complex workflows to autonomously fulfill high-level tasks demanding multiple specialized capabilities.

Event-Driven Architecture Components

Event-driven architecture forms the backbone of scalable AI orchestration systems, enabling reactive AI applications that respond to real-time data streams and agent decisions. This architectural pattern is particularly crucial for SaaS products requiring low-latency performance and seamless integration of multiple AI services.

Message-Driven Communication facilitates asynchronous coordination between distributed AI services. Platforms like Akka leverage the actor model to create isolated, stateful units that communicate asynchronously, making them ideal for building agent-based systems where each AI model or microservice operates independently while contributing to larger workflows.
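The idea of asynchronous, message-driven coordination can be sketched in a few lines. The example below is not Akka and not an actor framework; it uses Python's standard `asyncio.Queue` as a stand-in, with a producer emitting events and an independent worker consuming them. The event shape and the threshold rule standing in for a model call are assumptions.

```python
import asyncio


async def producer(queue, events):
    """Publish events, then a None sentinel to signal completion."""
    for event in events:
        await queue.put(event)
    await queue.put(None)


async def scoring_worker(queue, results):
    """Consume events independently of the producer."""
    while True:
        event = await queue.get()
        if event is None:
            break
        # Placeholder for a model call: flag unusually large amounts.
        results.append({"id": event["id"], "flagged": event["amount"] > 1000})


async def main():
    queue = asyncio.Queue()
    results = []
    events = [{"id": 1, "amount": 50}, {"id": 2, "amount": 5000}]
    await asyncio.gather(producer(queue, events), scoring_worker(queue, results))
    return results


results = asyncio.run(main())
print(results)
```

The producer never calls the worker directly; the queue decouples them, which is the property that lets each service scale or fail on its own.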

Real-Time Data Processing capabilities ensure that AI systems can handle continuous data streams and trigger appropriate responses based on incoming events. This is essential for applications requiring immediate decision-making, such as fraud detection, recommendation engines, or dynamic pricing systems in SaaS environments.

API-First Integration enables seamless connection between various AI services and external tools. Function calling from LLMs to tools through APIs represents a critical component of modern event-driven AI architectures, allowing for dynamic workflow adaptation based on real-time requirements and user interactions.
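Function calling generally means the model emits a structured request naming a tool and its arguments, and the application decides whether and how to run it. The sketch below assumes a simple JSON shape with `name` and `arguments` keys and a single hypothetical tool; actual LLM providers each define their own formats.

```python
import json


def get_invoice_total(customer_id: str) -> dict:
    """Hypothetical tool; a real one would query a billing system."""
    return {"customer_id": customer_id, "total_usd": 129.0}


# Only tools registered here can be invoked by the model.
TOOLS = {"get_invoice_total": get_invoice_total}


def dispatch_tool_call(raw_call: str) -> dict:
    """Parse a model-emitted tool call and run it if the tool is allowed."""
    call = json.loads(raw_call)
    name = call.get("name")
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    return {"result": TOOLS[name](**call.get("arguments", {}))}


ok = dispatch_tool_call(
    '{"name": "get_invoice_total", "arguments": {"customer_id": "c_123"}}'
)
rejected = dispatch_tool_call('{"name": "delete_account", "arguments": {}}')
print(ok, rejected)
```

Keeping an explicit allowlist means the model can request an action but cannot expand what the application is willing to do.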

The event-driven approach supports horizontal scaling for AI workloads, particularly important for LLM inference services and model deployment scenarios. This architecture pattern ensures that SaaS products can handle varying loads while maintaining responsiveness and system reliability across distributed AI components.

AI Execution and Governance Layers

AI execution layers manage the operational aspects of running AI systems at scale, while governance layers ensure compliance, security, and ethical AI deployment. For SaaS founders building scalable products, these layers provide essential oversight and control mechanisms for enterprise-grade AI applications.

Execution Management encompasses automated deployment of patches, updates, and new models to minimize disruptions to user experience. Orchestration platforms self-manage compute usage, prioritizing memory and resources where needed most to address urgent demands. This includes dynamic resource allocation in real-time to adapt to changing business requirements and shifting workflows.

Performance Monitoring provides ongoing oversight of AI applications throughout their entire lifecycle. Data scientists benefit from performance monitoring in data processing workflows that deliver clean, reliable data for accurate AI model results. Real-time monitoring capabilities enable organizations to track status, tweak workflows, fine-tune models for better outputs, and adjust data flows as needed.

Governance and Compliance Framework serves as the single point of control for entire AI applications, systems, or workflows. Centralized management makes it easier for organizations to ensure their AI initiatives meet legal and regulatory requirements. This is particularly crucial in sectors with strict privacy regulations, such as healthcare, finance, and legal services, when they apply generative AI solutions.

Transparency and Explainability features make opaque AI systems more interpretable, providing insight into AI processes as they work. This transparency is paramount for responsible AI use in sensitive data environments and helps organizations maintain compliance with evolving AI governance standards.

Fault Tolerance and Reliability Mechanisms

Robust fault tolerance mechanisms ensure AI systems maintain operational continuity despite component failures or unexpected disruptions. These reliability features are essential for SaaS products requiring high availability and consistent performance across distributed AI infrastructure.

Automated Error Handling systems continuously monitor AI applications for errors and performance degradation, automatically addressing issues through predefined response protocols. Orchestration platforms implement supervision strategies that maintain system uptime through intelligent failure recovery and component restart procedures.
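A common building block for automated error handling is retrying a failed call with exponential backoff before escalating. The sketch below is one minimal way to do that; the function names, the attempt limit, and the delay values are assumptions, and the flaky call simply simulates two transient failures.

```python
import time


def call_with_retries(operation, max_attempts=3, base_delay=0.01, sleep=time.sleep):
    """Retry an operation, doubling the wait after each failed attempt."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception as exc:  # broad on purpose for this sketch
            last_error = exc
            if attempt < max_attempts:
                sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(f"failed after {max_attempts} attempts") from last_error


attempts = {"count": 0}


def flaky_model_call():
    """Simulated dependency that fails twice, then succeeds."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


# The sleep function is injected so the example runs instantly.
outcome = call_with_retries(flaky_model_call, sleep=lambda seconds: None)
print(outcome, attempts["count"])
```

In practice teams usually retry only on errors known to be transient and add jitter to the delay so many clients do not retry in lockstep.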

Resource Optimization mechanisms dynamically adjust computational resource allocation based on system demands and failure scenarios. When components fail, these systems automatically redistribute workloads to healthy nodes, ensuring minimal service interruption and optimal resource utilization across the AI infrastructure.

Data Pipeline Resilience ensures reliable data quality and consistent data flow throughout AI workflows, even when individual pipeline components experience issues. This includes automated backup systems, redundant data paths, and quality validation checkpoints that maintain data integrity during system failures.

Scalability and Recovery features enable rapid recovery from failures while supporting horizontal scaling requirements. Container-based AI applications benefit from Kubernetes-native orchestration tools like Flyte and Kubeflow, which provide distributed system management and efficient resource optimization ideal for cloud deployments.

These fault tolerance mechanisms collectively ensure that AI orchestration systems can handle the complexity and scale requirements of modern SaaS applications while maintaining the reliability standards expected by enterprise customers.

Vertical AI Applications and Domain-Specific Terms

Industry-Specific AI Model Training

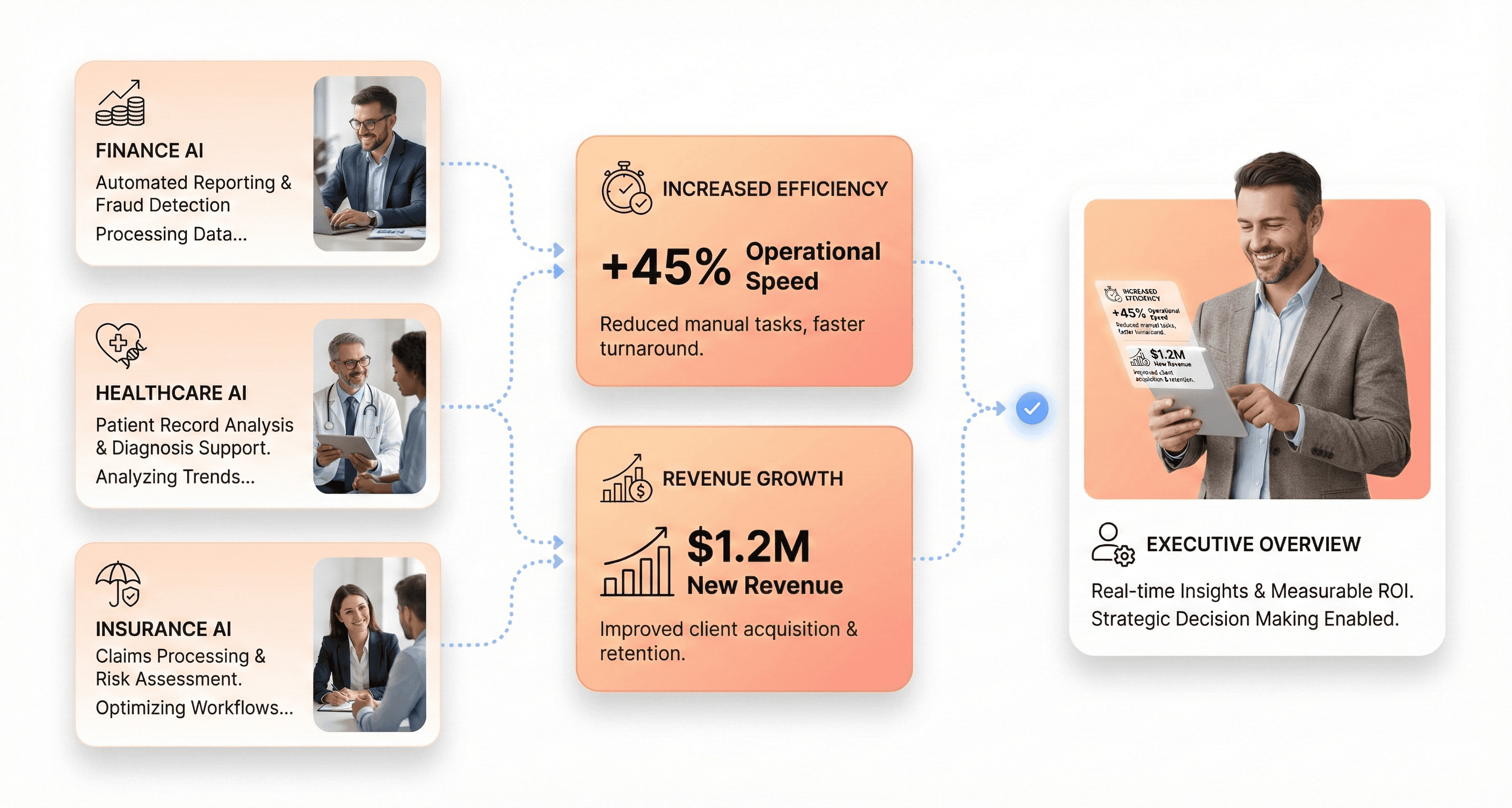

Vertical AI applications require specialized training approaches that differ fundamentally from horizontal AI development. Unlike general-purpose models trained on broad datasets, vertical AI applications utilize domain-specific data to build deep industry expertise. This training methodology focuses on proprietary and public data from a single industry, enabling models to understand specialized terminology, processes, and regulatory requirements.

The training process for vertical AI involves fine-tuning models with industry-specific datasets such as ACORD forms in insurance, medical records in healthcare, or financial filings in banking. This specialized approach allows AI implementation for SaaS products to deliver higher accuracy and faster deployment for niche use cases compared to horizontal solutions that require extensive customization.

Key training characteristics include domain expertise encoding, where models learn industry-specific terminology and logic, and regulatory compliance integration, where models embed rules and protocols tailored to standards and regulations such as SOC 2 or HIPAA. This targeted training approach reduces AI hallucinations and improves decision quality by providing contextual understanding that general models often lack.

Embedded Decision Logic Terminology

Understanding embedded decision logic is crucial for SaaS founders developing vertical AI applications. These systems incorporate pre-built decision frameworks that reflect real-world industry expectations and data structures. The terminology encompasses several key concepts that differentiate vertical AI from general-purpose solutions.

Domain constraints refer to the built-in guardrails that prevent AI systems from generating outputs outside acceptable industry parameters. Compliance-aware outputs ensure that all AI-generated recommendations align with regulatory frameworks specific to each vertical. Workflow-aligned logic describes how decision systems integrate seamlessly with established business processes rather than requiring significant reconfiguration.
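Domain constraints can be as simple as a validation layer that checks a model's recommendation before it reaches a user. The sketch below uses an insurance-style example with a hypothetical set of allowed decisions and a made-up automatic approval limit; real underwriting rules would be far richer.

```python
# Hypothetical guardrail values for illustration only.
ALLOWED_DECISIONS = {"approve", "refer", "decline"}
MAX_AUTO_APPROVE_USD = 50_000


def apply_domain_constraints(recommendation: dict) -> dict:
    """Reject model outputs that fall outside acceptable industry parameters."""
    violations = []
    decision = recommendation.get("decision")
    amount = recommendation.get("coverage_usd", 0)
    if decision not in ALLOWED_DECISIONS:
        violations.append(f"decision '{decision}' is outside the allowed set")
    if decision == "approve" and amount > MAX_AUTO_APPROVE_USD:
        violations.append("approval exceeds the automatic underwriting limit")
    return {"accepted": not violations, "violations": violations}


within_limits = apply_domain_constraints({"decision": "approve", "coverage_usd": 20_000})
over_limit = apply_domain_constraints({"decision": "approve", "coverage_usd": 75_000})
off_policy = apply_domain_constraints({"decision": "waive_premium", "coverage_usd": 0})
print(within_limits, over_limit, off_policy)
```

Because the check runs outside the model, the constraints stay enforceable and auditable even when the model itself changes.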

Regulatory alignment mechanisms represent another critical terminology area, describing how AI systems automatically account for industry-specific regulations. For instance, in insurance, these mechanisms ensure that AI recommendations consider policy exclusions, underwriting guidelines, and claims processing requirements without manual intervention.

Domain-Trained Workflow Concepts

Domain-trained workflows represent a fundamental shift from generic AI implementations to industry-specific automation. These concepts focus on scalable AI product development that addresses specialized business processes within particular verticals.

Industry-specific automation involves AI systems designed to handle specialized workflows that don't follow generic patterns. In insurance, this includes bordereaux reconciliation, claims triage, and submissions processing. Workflow integration ensures that AI solutions connect seamlessly with existing business tools and processes without disrupting established operations.

Contextual workflow understanding enables AI systems to interpret and act on industry-specific data structures and business logic. This includes understanding the nuances of real-world decisions like evaluating policy exclusions, identifying missing submission details, or surfacing trends across complex data sets.

Process-specific intelligence describes how AI systems develop expertise in particular business functions, moving beyond generic task automation to provide specialized insights that support critical business decisions within specific industry contexts.

Outcome-Driven AI Implementation

Outcome-driven AI implementation focuses on delivering measurable business value through domain-specific solutions. This approach prioritizes tangible results over general AI capabilities, ensuring that vertical AI applications provide clear return on investment for specialized use cases.

Value-driven deployment emphasizes faster implementation timelines achieved through pre-trained industry models. Unlike horizontal AI that requires extensive customization, vertical AI solutions enable quicker deployment due to their alignment with industry-specific requirements and data structures.

Performance optimization in vertical AI focuses on domain-specific metrics such as accuracy in specialized terminology interpretation, reduction in false positives for industry-specific scenarios, and improvement in decision quality for complex regulatory environments. These optimizations deliver competitive advantages that general tools cannot provide.

Strategic transformation represents the shift from reactive operations to proactive, insight-driven decisions. By embedding intelligence directly into industry-specific workflows, organizations achieve faster execution, smarter resource allocation, and continuous improvement across business operations. This transformation enables companies to lead in complex, regulated, and data-rich environments where generic AI solutions fall short.

Data-as-a-Service and Monetization Models

Data Versioning and Quality Standards

Data-as-a-service monetization requires robust versioning systems that track data evolution and maintain quality benchmarks. Companies implementing data monetization strategies must establish clear data lineage protocols that document data sources, transformations, and update cycles. This ensures that downstream applications and AI systems can reliably access consistent, high-quality datasets.
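One way to make versioning concrete is to derive the version identifier from the data itself, so that any change to the records produces a new version. The sketch below is a minimal illustration, not a full versioning system; the function name `dataset_version` and the record fields are hypothetical.

```python
import hashlib
import json


def dataset_version(records, source, transformations):
    """Build a version record whose ID is derived from the data content.

    Assumption: records are JSON-serializable dictionaries. Key order is
    normalized so that the same content always yields the same ID.
    """
    payload = json.dumps(records, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return {
        "version": digest[:12],                     # short content-derived ID
        "source": source,                           # where the data came from
        "transformations": list(transformations),   # steps applied, in order
        "row_count": len(records),
    }


# Hypothetical example data
v1 = dataset_version(
    [{"account": "a-1", "mrr": 120}],
    source="billing_export",
    transformations=["dedupe", "currency_normalize"],
)
v2 = dataset_version(
    [{"account": "a-1", "mrr": 125}],
    source="billing_export",
    transformations=["dedupe", "currency_normalize"],
)
print(v1["version"] != v2["version"])  # a changed value yields a new version ID
```

Because the ID depends only on content, two consumers can confirm they are working from the same dataset by comparing a short string.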

Quality standards encompass data completeness, accuracy, and freshness metrics that directly impact monetization potential. Organizations need to implement automated quality checks that validate data integrity before making it available through APIs or service offerings. These standards become particularly critical when data insights are sold externally, as poor quality can damage customer relationships and revenue streams.
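An automated quality gate of this kind can be quite small. The sketch below measures completeness and freshness for a batch of records and blocks publication when either falls below a threshold. Accuracy checks are omitted because they need a trusted reference to compare against. The field names and thresholds are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone


def quality_report(rows, required_fields, timestamp_field, max_age, now=None):
    """Return completeness and freshness ratios (0.0 to 1.0) for a batch."""
    now = now or datetime.now(timezone.utc)
    total = len(rows)
    if total == 0:
        return {"completeness": 0.0, "freshness": 0.0}
    # A row is complete when every required field has a non-empty value.
    complete = sum(
        1 for row in rows
        if all(row.get(f) not in (None, "") for f in required_fields)
    )
    # A row is fresh when its timestamp is within max_age of "now".
    fresh = sum(
        1 for row in rows
        if row.get(timestamp_field) is not None
        and now - row[timestamp_field] <= max_age
    )
    return {"completeness": complete / total, "freshness": fresh / total}


def passes_quality_gate(report, thresholds):
    """True only if every measured metric meets its minimum threshold."""
    return all(report[metric] >= minimum for metric, minimum in thresholds.items())


# Hypothetical batch: one row is missing revenue, one row is stale.
utc = timezone.utc
rows = [
    {"id": 1, "revenue": 120.0, "updated_at": datetime(2026, 1, 9, tzinfo=utc)},
    {"id": 2, "revenue": None, "updated_at": datetime(2026, 1, 9, tzinfo=utc)},
    {"id": 3, "revenue": 80.0, "updated_at": datetime(2025, 12, 1, tzinfo=utc)},
    {"id": 4, "revenue": 95.0, "updated_at": datetime(2026, 1, 10, tzinfo=utc)},
]
report = quality_report(
    rows, ["id", "revenue"], "updated_at",
    max_age=timedelta(days=7), now=datetime(2026, 1, 10, tzinfo=utc),
)
print(report, passes_quality_gate(report, {"completeness": 0.9, "freshness": 0.9}))
```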

Usage-Based API Terminology

The shift toward usage-based API monetization models introduces specific terminology that SaaS founders must understand. Rate limiting, throttling, and quota management become essential concepts for controlling data access and ensuring fair usage across customer tiers. API consumption metrics drive pricing models, where customers pay based on actual data queries, processing volume, or insight generation frequency.
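Rate limiting is often implemented with a token bucket: each request spends a token, the bucket refills at a steady rate, and the bucket size caps how many requests can arrive at once. The sketch below is a minimal single-process version with explicit timestamps so the behavior is easy to follow; the class name and the numbers are illustrative, and a production limiter would usually live in shared storage.

```python
class TokenBucket:
    """Minimal token-bucket rate limiter.

    capacity          -> the largest burst allowed at once
    refill_per_second -> the steady request rate the bucket sustains
    """

    def __init__(self, capacity, refill_per_second, now=0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)  # start full
        self.last = now

    def allow(self, now, cost=1.0):
        """Return True if a request arriving at time `now` may proceed."""
        elapsed = max(0.0, now - self.last)
        # Refill for the time that has passed, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last = max(self.last, now)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller would typically respond with HTTP 429


# A hypothetical tier: bursts of 3 requests, 1 request per second sustained.
bucket = TokenBucket(capacity=3, refill_per_second=1.0)
print([bucket.allow(now=0.0) for _ in range(4)])  # fourth request is rejected
print(bucket.allow(now=1.0))                      # one token has refilled
```

Different customer tiers can then be expressed as different `capacity` and `refill_per_second` values rather than as separate code paths.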

Token-based authentication and metered billing systems enable granular control over data access rights and usage tracking. Understanding concepts like burst capacity, sustained throughput, and overage charges helps founders design pricing strategies that align with customer value creation while protecting infrastructure costs.
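Metered billing with overage charges reduces to simple arithmetic once usage is tracked. The sketch below assumes a plan with a base fee, an included call allowance, and a per-call overage price. All plan names and prices are hypothetical, and amounts are kept in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    base_fee_cents: int          # flat monthly fee
    included_calls: int          # API calls covered by the base fee
    overage_cents_per_call: int  # price of each call beyond the allowance


def monthly_charge_cents(plan, calls_used):
    """Base fee plus overage for any calls beyond the included allowance."""
    overage_calls = max(0, calls_used - plan.included_calls)
    return plan.base_fee_cents + overage_calls * plan.overage_cents_per_call


# Hypothetical plan: $49.00 per month, 10,000 calls included, $0.02 per extra call.
growth = Plan("growth", base_fee_cents=4900, included_calls=10_000, overage_cents_per_call=2)
print(monthly_charge_cents(growth, 8_000))   # within the allowance
print(monthly_charge_cents(growth, 12_500))  # 2,500 overage calls are billed
```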

Industry Intelligence and Benchmarking

Data monetization opportunities often emerge through industry intelligence services that aggregate and anonymize operational data across customers. Companies can leverage aggregated insights to offer benchmarking services, helping clients understand their performance relative to industry standards. This approach transforms internal operational data into external revenue streams while maintaining customer privacy.

Successful benchmarking services require sophisticated data aggregation techniques that preserve individual customer confidentiality while generating meaningful comparative insights. The ability to provide industry-specific intelligence creates additional value layers beyond raw data access.
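A common first safeguard is to suppress any benchmark built from too few customers and to publish only summary statistics. The sketch below shows that idea with a minimum cohort size. It is a simple suppression rule rather than a formal privacy guarantee, and the threshold of five and all names are assumptions.

```python
from statistics import median


def benchmark(values_by_customer, min_cohort=5):
    """Return aggregate stats, or None when the cohort is too small to publish.

    values_by_customer maps a customer ID to that customer's metric value.
    Only the cohort size and median are exposed, never individual values.
    """
    if len(values_by_customer) < min_cohort:
        return None  # too few customers: an aggregate could reveal individuals
    return {
        "cohort_size": len(values_by_customer),
        "median": median(values_by_customer.values()),
    }


def percentile_rank(values_by_customer, customer_id):
    """Share of the cohort (0 to 100) that scores below the given customer."""
    own = values_by_customer[customer_id]
    below = sum(1 for v in values_by_customer.values() if v < own)
    return 100.0 * below / len(values_by_customer)


# Hypothetical metric: weekly active users per seat, in percent.
cohort = {"c1": 10, "c2": 20, "c3": 30, "c4": 40, "c5": 50}
print(benchmark(cohort))
print(percentile_rank(cohort, "c4"))
```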

AI-Ready Dataset Specifications

AI implementation success depends heavily on properly formatted and structured datasets that meet machine learning requirements. AI-ready specifications include standardized data schemas, consistent labeling conventions, and preprocessing pipelines that ensure data compatibility across different AI models and frameworks.

Dataset specifications must address feature engineering requirements, training-validation splits, and metadata documentation that enables effective model development. Companies monetizing data for AI applications need to understand concepts like data drift detection, feature store management, and model performance monitoring to maintain dataset value over time.
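Data drift detection can be illustrated with the Population Stability Index (PSI), one widely used measure that compares how a feature is distributed in a reference sample and in recent data. The sketch below is a minimal pure-Python version; the bin edges are chosen by hand and both samples are assumed to be non-empty.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a reference and a current sample.

    `edges` are ascending bin boundaries; values below the first edge fall in
    bin 0, and so on. Empty bins are floored at `eps` to keep the log finite.
    """
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(1 for e in edges if v >= e)] += 1
        return [max(c / len(values), eps) for c in counts]

    p = shares(expected)
    q = shares(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical feature values at training time versus this week.
training = [1, 2, 3, 4, 5, 6, 7, 8]
this_week = [6, 7, 8, 9, 6, 7, 8, 9]
print(psi(training, training, edges=[3, 6]))   # identical samples score 0.0
print(psi(training, this_week, edges=[3, 6]))  # a shifted sample scores much higher
```

A frequently quoted rule of thumb treats values above roughly 0.25 as a significant shift, but the right alert threshold depends on the feature and should be tuned per dataset.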

AI Governance and Enterprise Compliance

Model Transparency and Auditability

Effective AI governance and enterprise compliance begin with comprehensive model transparency and auditability mechanisms. Organizations must systematically track data sources, model assumptions, training methodologies, and evaluation processes to maintain visibility across the entire AI lifecycle. This transparency requires centralized metadata management and audit trails that make operational details visible and verifiable across teams.

Governance tools that centralize access policies and provide unified data governance solutions support this transparency by standardizing documentation practices. Teams should establish clear documentation requirements that include model versioning, lineage tracking, and decision-making processes to enable thorough audits when issues arise. This systematic approach ensures that AI systems can be traced and validated, supporting both internal oversight and regulatory compliance requirements.
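One possible way to make an audit trail verifiable is to chain entries together with hashes, so that editing an earlier record invalidates everything after it. The sketch below illustrates that technique under simple assumptions: events are JSON-serializable dictionaries, and the event fields shown are hypothetical. It is not a description of any particular governance tool.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(event, prev_hash):
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify(log):
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical model lifecycle events
audit_log = []
append_entry(audit_log, {"action": "train", "model_version": "1.4.0", "dataset": "claims_2025q4"})
append_entry(audit_log, {"action": "approve", "model_version": "1.4.0", "reviewer": "risk-team"})
print(verify(audit_log))                         # chain is intact
audit_log[0]["event"]["dataset"] = "other_data"  # simulate tampering
print(verify(audit_log))                         # tampering is detected
```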

Model transparency also extends to establishing interpretability mechanisms such as execution graphs, confidence scores, and traceable reasoning chains. These technical safeguards enable both technical and non-technical stakeholders to understand AI-driven outputs, which is particularly critical in regulated industries where accountability and trust are paramount.

Data Lineage and Tracking Systems

Data lineage represents a foundational component of AI governance, providing organizations with the ability to trace data flows from source to model output. Implementing robust lineage tracking requires systematic documentation of data transformations, pipeline processes, and access controls throughout the AI development lifecycle. This tracking ensures reproducible pipelines and transparent transformations that can be monitored over time.

Effective data lineage systems integrate directly with operational infrastructure to provide automated tracking capabilities. Organizations should implement unified catalog solutions that enforce lineage requirements while maintaining metadata for risk assessment and auditability purposes. These systems enable teams to identify data quality issues, assess the impact of changes, and maintain compliance with governance standards.
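At its core, lineage is a graph: each dataset or model points to the inputs it was built from. The sketch below stores that graph as a plain dictionary and answers the two questions lineage is usually asked: where did this come from, and what is affected if this changes. The asset names are hypothetical.

```python
def upstream(lineage, node):
    """Every asset that `node` depends on, directly or indirectly."""
    seen = set()
    stack = list(lineage.get(node, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(lineage.get(current, []))
    return sorted(seen)


def downstream(lineage, node):
    """Every asset that would be affected by a change to `node`."""
    return sorted(name for name in lineage if node in upstream(lineage, name))


# Hypothetical pipeline: each key lists the direct inputs it was built from.
LINEAGE = {
    "churn_model_v3": ["features_v2"],
    "features_v2": ["events_clean", "accounts"],
    "events_clean": ["events_raw"],
}

print(upstream(LINEAGE, "churn_model_v3"))  # trace a model back to its sources
print(downstream(LINEAGE, "events_raw"))    # impact analysis for a source change
```

Catalog tools typically capture this graph automatically from pipeline runs; the traversal logic stays the same.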

The implementation of strong data engineering practices supports reliable lineage tracking by establishing controlled data foundations with well-governed access patterns. Teams must ensure that schema enforcement, controlled access mechanisms, and versioning capabilities are embedded within their data infrastructure to prevent downstream transparency and reproducibility challenges.

AI Decision Documentation Requirements

AI decision documentation serves as a critical component for maintaining accountability and supporting audit requirements across enterprise environments. Organizations must establish comprehensive documentation standards that capture model development decisions, risk assessments, and mitigation strategies implemented throughout the AI lifecycle. This documentation provides the foundation for regulatory compliance and internal governance oversight.

Structured documentation should include detailed risk assessments covering potential issues such as bias, model drift, data leakage, and unsafe outputs. Teams must document mitigation strategies and controls implemented to address identified risks, creating transparency that supports both regulatory and internal audit requirements. This documentation becomes essential when organizations need to demonstrate compliance with evolving AI governance frameworks and regulatory expectations.

Documentation requirements should extend to ongoing monitoring and evaluation processes that track model performance, assess data drift, detect bias, and confirm policy compliance. Regular documentation of model reviews by cross-functional teams helps organizations maintain current records of governance decisions and supports proactive adjustments to policies and processes as conditions evolve.

Procurement and Evaluation Criteria

Establishing clear procurement and evaluation criteria for AI systems ensures that governance requirements are embedded from the acquisition phase through deployment. Organizations must develop structured evaluation frameworks that assess potential AI solutions against established governance standards, compliance requirements, and risk management criteria. These frameworks should align with organizational risk tolerance levels and regulatory obligations.

Evaluation criteria should encompass technical capabilities, security features, transparency mechanisms, and compliance alignment with relevant frameworks such as the EU AI Act or NIST AI Risk Management Framework. Organizations must assess whether potential AI systems provide adequate audit trails, explainability features, and integration capabilities with existing governance infrastructure.

The procurement process should include assessment of vendor governance practices, data handling procedures, and ongoing support for compliance requirements. Teams should evaluate whether AI vendors provide necessary documentation, training, and support structures to maintain governance standards throughout the system lifecycle. This comprehensive evaluation approach ensures that procured AI systems align with enterprise governance objectives while supporting scalable and compliant deployment across organizational contexts.
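A structured evaluation framework can start as a weighted scorecard with a few hard requirements. The sketch below assumes ratings on a 0 to 5 scale and disqualifies any vendor that scores zero on a must-have criterion. The criteria, weights, and ratings are hypothetical and would need to reflect your own risk tolerance and regulatory obligations.

```python
def score_vendor(ratings, weights, must_have=()):
    """Weighted average of 0-5 ratings, with must-have criteria as a hard gate."""
    missing = [c for c in must_have if ratings.get(c, 0) == 0]
    if missing:
        return {"qualified": False, "missing": missing, "score": 0.0}
    total_weight = sum(weights.values())
    score = sum(weights[c] * ratings.get(c, 0) for c in weights) / total_weight
    return {"qualified": True, "missing": [], "score": score}


# Hypothetical criteria and weights
WEIGHTS = {"audit_trails": 3, "explainability": 2, "security": 3, "integration": 2}

vendor_a = {"audit_trails": 4, "explainability": 3, "security": 5, "integration": 2}
vendor_b = {"audit_trails": 0, "explainability": 5, "security": 5, "integration": 5}

print(score_vendor(vendor_a, WEIGHTS, must_have=("audit_trails",)))
print(score_vendor(vendor_b, WEIGHTS, must_have=("audit_trails",)))  # fails the gate
```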

Advanced Interface Technologies

Multimodal AI Integration Terms

Multimodal AI agents are comprehensive information-processing systems that can analyze data of different types and structures, going beyond single-input AI models restricted to text or images alone. These systems combine multiple modalities to create broader context, increased flexibility, and more effective responses. For SaaS founders building scalable products, understanding multimodal fusion techniques is crucial. These include early fusion (combining raw data inputs at initial stages), late fusion (processing each modality independently before merging results), and hybrid fusion (integrating features at multiple points for optimal performance).
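The difference between early and late fusion is easiest to see side by side. In the sketch below, a toy linear scorer stands in for a real model: early fusion joins the feature vectors before scoring, while late fusion scores each modality separately and merges the results. The feature values and weights are made up for illustration, and hybrid fusion is not shown.

```python
def early_fusion(text_features, image_features, weights, bias=0.0):
    """Concatenate features from both modalities, then score them with one model."""
    joint = list(text_features) + list(image_features)
    if len(weights) != len(joint):
        raise ValueError("need exactly one weight per joint feature")
    return sum(w * x for w, x in zip(weights, joint)) + bias


def late_fusion(text_score, image_score, text_weight=0.5):
    """Score each modality with its own model, then blend the two scores."""
    return text_weight * text_score + (1.0 - text_weight) * image_score


# Hypothetical features for a support ticket with an attached screenshot
text_features = [1.0, 2.0]
image_features = [3.0]

print(early_fusion(text_features, image_features, weights=[0.5, 0.25, 1.0]))
print(late_fusion(text_score=0.8, image_score=0.4, text_weight=0.75))
```

Early fusion lets the model learn interactions between modalities, while late fusion keeps each pipeline independent, which makes it easier to swap or retrain one modality at a time.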

Cross-modal attention mechanisms let agents focus on the most relevant parts of each data stream, so that context from one modality improves interpretation in another. This is essential for applications that analyze multiple sources at once, such as video with commentary or images described in speech. Training typically involves contrastive learning, in which the model learns to place matched pairs (for example, an image and its caption) close together in a shared embedding space and to push mismatched pairs apart, improving its ability to identify correspondences between modalities.
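The contrastive objective can be sketched in a few lines. The example below computes an InfoNCE-style loss in one direction (text to image) over tiny hand-written embeddings: the loss is low when each text vector is most similar to its own image vector and high when the pairs are mismatched. Real systems such as CLIP learn the embeddings with neural encoders and use a symmetric version of the loss. The temperature value and vectors are illustrative, and all embeddings are assumed to be non-zero.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(v):
    norm = math.sqrt(_dot(v, v))
    return [x / norm for x in v]


def contrastive_loss(text_embs, image_embs, temperature=0.07):
    """Average text-to-image InfoNCE loss; row i of each list is a matched pair."""
    texts = [_normalize(v) for v in text_embs]
    images = [_normalize(v) for v in image_embs]
    total = 0.0
    for i, t in enumerate(texts):
        # Cosine similarity of this text to every image, scaled by temperature.
        logits = [_dot(t, img) / temperature for img in images]
        # Numerically stable log-sum-exp over all candidate images.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        # Cross-entropy with the matched image (index i) as the correct class.
        total += log_denominator - logits[i]
    return total / len(texts)


texts = [[1.0, 0.0], [0.0, 1.0]]
aligned_images = [[1.0, 0.0], [0.0, 1.0]]  # each image matches its caption
swapped_images = [[0.0, 1.0], [1.0, 0.0]]  # captions attached to the wrong images

print(contrastive_loss(texts, aligned_images))  # close to zero
print(contrastive_loss(texts, swapped_images))  # large
```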

Key architectural components include transformer-based models such as CLIP and DALL-E, which learn associations between text and images. Vision + LLM systems particularly stand out, enabling interpretation of images with narrative explanations, answering questions about visual scenes, and performing grounded reasoning tasks.

Voice-Activated SaaS Concepts

Voice identification technology is revolutionizing user authentication and interaction patterns in SaaS applications. Voice ID systems create unique voice prints for secure and quick authentication, enabling users to access services through natural speech patterns while maintaining security protocols. This technology allows users to request services, transfer data, or voice complaints through simple voice commands.

Natural language processing (NLP) integration with voice interfaces creates more intuitive user experiences by understanding the context, emotion, and intent behind voice commands. Synchronizing voice with other interaction modes, such as touch events, enables seamless user activity across different device types.

Advanced voice-activated systems incorporate intelligent assistants that foster personal connections through natural-language interactions, picking up emotional cues that users convey through tone rather than words and responding in a contextually appropriate way. These systems can identify individual speakers and provide relevant information accordingly, making them particularly valuable for multi-user SaaS environments.

Vision AI and Document Processing

Vision AI systems are being enhanced through multimodal integration to become more context-aware, accurate, and actionable. Vision + Text combinations allow systems to interpret images with narrative explanations, answer questions about visual scenes, and execute tasks based on combined text and image inputs. For example, vision + LLM systems can detect defects in manufacturing processes and generate natural-language explanations for root causes.

Vision + Audio integration adds contextual layers by detecting visual anomalies with supporting audio cues like alarms or sirens, improving situational awareness in surveillance and safety systems. Vision + Sensors (IoT + Edge) enables physical intelligence for real-world decision-making by incorporating movement, pressure, temperature, and location data alongside camera feeds.

Multimodal fusion techniques in vision AI help correlate image regions with textual meaning, improve object recognition, reduce hallucinations, and handle ambiguity and incomplete inputs. Enterprise benefits include higher accuracy, faster decisions, cross-context understanding, and deeper multimodal reasoning capabilities. These improvements are particularly valuable for document processing applications where text extraction, layout analysis, and content comprehension must work together seamlessly.

Context-Aware System Design

Context-aware systems leverage streaming pipelines, unified context memory, and low-latency multimodal inference stacks to enable continuous and adaptive agent behavior. These systems apply multimodal filtering, cross-modal consistency checks, and policy-driven validation across images, text, and sensor data to ensure safety and reliability.

Data modules collect, store, and process information to generate personalized and context-based responses while maintaining user profiles and synchronizing data across modalities. The interaction manager optimizes user and system interaction by selecting the best modality considering the context of use and device capabilities, ensuring seamless transitions between different interaction modes.
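The interaction manager's core decision can be expressed as a small rule: take the user's preferred modalities in order, and skip any the device lacks or the current context rules out. The sketch below is a simplified illustration. The context flags, the modality names, and the fallback to text are all assumptions.

```python
def choose_modality(device_capabilities, context, preferences, fallback="text"):
    """Pick the first preferred modality that the device and context allow."""
    blocked = set()
    if context.get("noisy"):
        blocked.add("voice")    # speech recognition is unreliable in noise
    if context.get("hands_busy"):
        blocked.add("touch")    # e.g. the user is driving or carrying something
    if context.get("low_light"):
        blocked.add("gesture")  # camera-based gestures need visibility
    for modality in preferences:
        if modality in device_capabilities and modality not in blocked:
            return modality
    return fallback


# Hypothetical user on a phone in a loud warehouse
print(choose_modality(
    device_capabilities={"voice", "touch"},
    context={"noisy": True},
    preferences=["voice", "touch", "gesture"],
))
```

Keeping this logic in one place makes transitions between modes predictable and gives product teams a single function to test as new devices and contexts are added.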

Application logic defines system responses to user input and governs behavior through algorithms that identify and resolve problems to prevent data loss while ensuring tailored user experiences. Different modes of interaction, including speech recognition, touch interfaces, and gesture recognition, are combined based on user preferences and context, offering flexibility and enhanced user journeys.

User feedback mechanisms remain vital, providing textual feedback to hearing-challenged users or visual cues to ensure input registration. By embracing these components, SaaS founders can develop multimodal interface systems that provide competitive advantages through enhanced accessibility, convenience, and user satisfaction.

Conclusion

The AI landscape for SaaS in 2026 demands more than surface-level integration; it requires foundational readiness. From understanding essential terminology and infrastructure requirements to implementing workflow orchestration and governance frameworks, successful SaaS founders must approach AI strategically rather than experimentally. The shift from feature velocity to infrastructure rebuilds, the rise of vertical AI applications, and the evolution of data-as-a-service models all point to one reality: AI is becoming the invisible backbone that powers scalable, reliable products.

The companies that thrive won't be those with the flashiest AI demos, but those whose systems can handle AI at scale repeatedly, securely, and profitably. As budgets shift from AI experiments to AI readiness, and as workflow orchestration becomes the control plane for intelligent applications, mastering these AI terms and concepts isn't optional. It's essential for building products that can compete and scale in an AI-first world. The foundation you build today determines whether your SaaS platform becomes AI-ready infrastructure or gets left behind by those who understood the importance of intentional, well-architected AI adoption.

About the author

Author Name: Parth G | Founder of Hashbyt

I’m the founder of Hashbyt, an AI-first frontend and UI/UX SaaS partner helping 200+ SaaS companies scale faster through intelligent, growth-driven design. My work focuses on building modern frontend systems, design frameworks, and product modernization strategies that boost revenue, improve user adoption, and help SaaS founders turn their UI into a true growth engine.